What's new with AI whistleblowers?

A few weeks ago, I published an article with AI Policy Bulletin, “Governments Need to Protect AI Industry Whistleblowers: Here's How.” Thanks to the response to that piece, Secure AI Project and Encode looped me into various efforts to enact U.S. state legislation protecting whistleblowers at frontier AI companies.

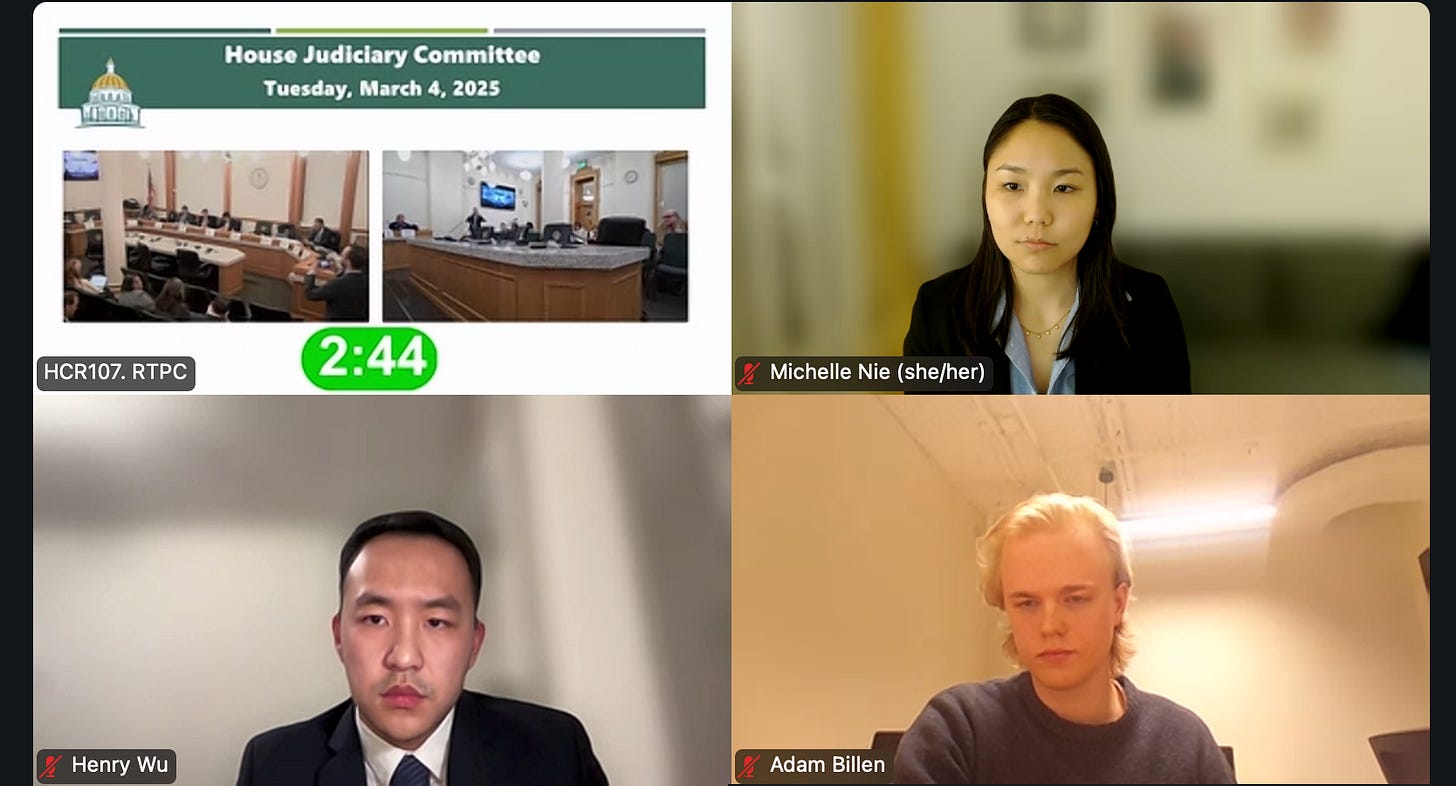

Last week, I had the opportunity to testify in support of Colorado House Bill 25-1212, concerning public safety protection from artificial intelligence systems. Introduced by Colorado Representatives Manny Rutinel and Matt Soper, this bipartisan bill would enact sweeping whistleblower protections for Colorado-based workers (employees and contractors) at leading AI companies. I was proud to support an effort from Colorado, a state where I’ve worked on multiple policy programs and one worth following closely for forward-leaning AI policy developments.

The bill passed the House Judiciary Committee, and while it still has a long way to go before it is enacted into law, I’m hopeful about what the victory signals: that AI whistleblower protection is a bipartisan issue that everyone can get behind.

Read on below for a copy of my written testimony as well as a roundup of states implementing legislation to protect AI whistleblowers.

Proposed State Bills on Protecting AI Whistleblowers

In addition to Colorado, legislators in California, New York, Massachusetts, and Illinois have introduced bills to protect AI whistleblowers. All of these bills focus on protecting employees (including contractors, subcontractors, and unpaid advisors) who report potential harms from advanced AI systems, though they vary in their specifics around coverage, remedies, and reporting mechanisms.

California: CalCompute: Foundation Models: Whistleblowers (SB-53). Introduced by Senator Scott Wiener of SB-1047 fame, this bill would apply to employees of developers that have trained at least one foundation model using computing power costing at least $100 million. It would protect disclosures made internally or to state and federal authorities when employees believe the developer's activities pose a critical risk or that the developer has made false or misleading statements about its management of critical risk. Importantly, the bill would establish a robust internal reporting process, requiring employers to create internal channels for anonymous disclosures, provide monthly updates on investigation status, and share disclosures with management on a quarterly basis. Although temporary or preliminary injunctive relief is available to employees whose employers are in violation, the bill does not mention specific monetary damages.

New York: Responsible AI Safety and Education (RAISE) Act (A06453). Introduced by Assemblymember Alex Bores, this bill targets “large developers” that have trained at least one frontier model costing at least $5 million and have spent over $100 million in total compute costs. It protects employees who disclose information they believe indicates that their employer’s activities pose an “unreasonable or substantial risk of critical harm, regardless of the employer’s compliance with applicable law.” Retaliation can trigger civil penalties of up to $10,000 per violation, and affected employees may also petition the court for temporary or preliminary injunctive relief. However, the bill’s internal reporting provisions are relatively weak, going little further than requiring employers to post notices about their employees’ rights.

Massachusetts: An Act promoting economic development with emerging artificial intelligence models and safety (S.37). Introduced by Senator Barry Finegold, this bill applies to developers that have trained at least one model costing at least $100 million in compute. It protects disclosures to the Attorney General when an employee believes the developer is out of compliance with the bill’s requirements or that its activities pose an unreasonable risk of causing critical harm. Employees who are retaliated against have a two-year window to file a civil action, and available remedies may include restraining orders, reinstatement to the same or an equivalent position, full restoration of benefits, payment of attorneys’ fees, and compensation of three times lost remuneration. As with the California bill, employers would be required to provide internal channels for anonymous disclosures, provide monthly updates on investigation status, and share disclosures with management quarterly.

Illinois: Artificial Intelligence Safety and Security Protocol Act (HB3506). Introduced by Representative Daniel Didech, this bill would also cover developers that have trained at least one foundation model with compute costing at least $100 million. It likewise protects disclosures to the Attorney General when an employee has reasonable cause to believe the information indicates that the developer's activities pose an unreasonable or substantial critical risk. Employers in violation may face civil penalties of up to $1,000,000, though the bill does not specify whether these fines would go to whistleblowers directly. As with the California and Massachusetts bills, it would require employers to provide anonymous internal reporting channels, share monthly updates on investigations, and, at least quarterly, share disclosures with officers and directors who do not have conflicts of interest.

At a time when passing federal legislation on AI safety will be difficult, if not outright impossible, I’m hopeful that these state-led efforts will protect whistleblowers and incentivize them to speak up about impending harms, enabling internal and external stakeholders to act quickly to avert large-scale disasters. As mentioned, these bills tend to have strong bipartisan support, apply only to the top frontier AI labs, and help mitigate the often enormous risks faced by employees who raise concerns.

Written Testimony in Support of House Bill 25-1212

Below is the full text of my written testimony for Colorado’s HB25-1212.

Dear Chair Mabrey and Vice Chair Carter,

I am writing to express my strong support for HB25-1212, concerning public safety protection from artificial intelligence systems. As a consultant and researcher who has worked on multiple projects for the State of Colorado, including Opportunity Now Colorado and the Colorado Middle Income Housing Authority, and who has studied best practices for whistleblower protections for AI researchers, I believe this bill includes many robust protections for AI company workers that could be crucial in incentivizing them to speak up in good faith about risks to the public interest.

Whistleblower protection laws specific to AI are needed more than ever because current whistleblower protections generally apply only to the reporting of illegal activity. Because the rapid advancement of AI technology greatly outpaces our ability to regulate it and establish clear legal boundaries, robust interim measures such as HB25-1212 are essential. The bill’s protection of whistleblowing with respect to “a substantial risk to public safety or security, even if the developer is not out of compliance with any law” sends a powerful message that acknowledges the broad applications of these systems and their far-reaching impacts on society.

Furthermore, the creation of internal reporting channels should be commended as a best practice that will ensure relevant information reaches the appropriate stakeholders as efficiently as possible. Studies show that as many as 89% of whistleblowers report only internally. Internal reporting is often the best way to get information to the decision-makers who can contribute to the early and effective resolution of concerns.

Another strong feature of the bill is its anonymous reporting process, which will alleviate concerns about the extreme personal risk involved in whistleblowing. According to one study, 69% of surveyed whistleblowers lost their jobs or were forced to retire, and 64% were blacklisted from getting another job in their field. In an industry where only a handful of companies are at the frontier of AI development, being blacklisted by one’s employer could mean never getting hired again. Procedures allowing for confidential and anonymous reporting could shield workers from the often enormous reputational and financial costs of whistleblowing. Monetary relief for workers whose employers violate the bill will also act as financial insurance for those who choose to blow the whistle.

Lastly, the prohibition of contracts that restrict workers from disclosing good-faith concerns about public safety or security, such as non-disparagement agreements, is important not only to strengthen whistleblower protections but also to set company standards. Over five hundred former OpenAI employees, many of whom have had safety or security concerns, have signed non-disparagement clauses under the threat of having their equity revoked.

History has shown that we cannot count on corporations to do the “right thing.” Indeed, voluntary policies and procedures can only go so far: as current and former employees of OpenAI, DeepMind, and Anthropic warned in an open letter, companies have “strong financial incentives to avoid effective oversight.”

My research has shown that many workers at leading AI companies have a genuine interest in furthering the potential of groundbreaking technologies, but many also have valid safety concerns. Many have felt that they had nowhere to turn because of their companies’ restrictive policies. By cementing these whistleblower protections into law, Colorado can address these concerns and set a powerful standard for AI-specific whistleblower legislation.

I am always impressed by how forward-thinking Colorado is on many issues, including the risks posed by artificial intelligence. I believe Colorado can not only become a pioneer in safe AI innovation but also set a strong precedent in protecting the workers at the forefront of this innovation.

Thank you for your consideration.